1. When AI Finally Meets IoT

I’ve been building projects with the ESP32 for years — sensors, relays, and small control dashboards hosted on the board itself. Every project felt clever at first, but the pattern was always the same: a few endpoints, some MQTT messages, and a long list of hard-coded rules. It worked, but it was never really intelligent. The ESP32 could react, not reason.


That gap between automation and understanding has bothered me for a long time. Every “smart” device still depends on static logic: if temperature > 25 °C, turn on fan 1; if door opens, send MQTT notification. None of this feels like genuine intelligence — it’s predictable cause-and-effect, not comprehension.

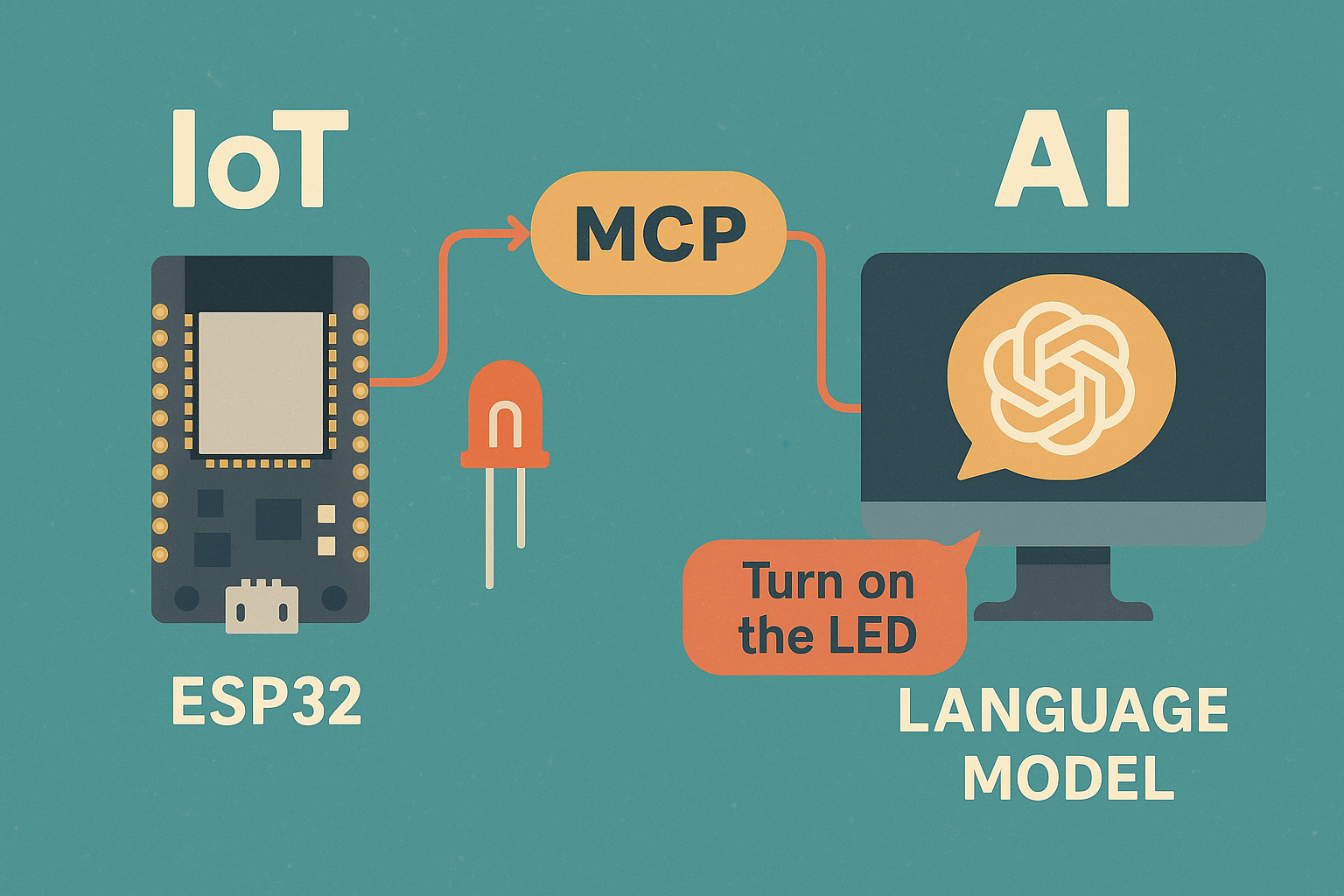

Then, early this year, I came across the Model Context Protocol (MCP), an open standard introduced by Anthropic that lets large language models (LLMs) communicate with external tools. That small announcement immediately changed how I looked at my microcontrollers. If an AI model could securely call APIs, query data, or run functions through MCP, why couldn’t it also toggle GPIOs or read a sensor?

That idea opened a new line of thought: connecting LLMs and IoT through a shared, standardized interface. Instead of designing one more “assistant skill” or chat command, we could expose the ESP32’s own capabilities — Wi-Fi, sensors, actuators — as callable MCP tools. The LLM could then reason in plain language, translating intent into real-world actions.

Imagine typing:

“Turn on GPIO 5 for five minutes, and let me know when the temperature goes above 25 °C.”

No firmware rebuilds, no custom endpoints, just an AI using the same protocol it already understands to control a real device.


That’s the journey this article explores: how ESP32 and MCP can finally work together, how this bridge changes what IoT development looks like, and why connecting a language model to real hardware might be the next major shift in embedded automation.

2. From Web Servers to AI Conversations

Long before LLMs entered the picture, the usual way to control an ESP32 was through a web server or MQTT topic. The workflow was always the same: you’d flash a firmware, open a browser, and hit an endpoint like:

http://esp32.local/gpio?pin=5&state=1

The relay would click, and you’d get a neat sense of victory. But underneath, it was all mechanical: no understanding, no flexibility. If you wanted the ESP32 to respond to temperature, you had to write explicit logic:

if (temperature > 25) {
  digitalWrite(fanPin, HIGH);
}

Every scenario meant more code, more conditions, and another re-flash. The ESP32 was powerful, but it didn’t listen. It executed exactly what you told it, nothing more.

That model still defines how most IoT systems operate. Devices expose REST or MQTT endpoints; apps send commands. It’s structured, predictable, and completely dependent on predefined logic. There’s no reasoning layer between “intent” and “action.”

Now contrast that with an AI model like ChatGPT or Claude. These systems already interpret complex human language — they understand intent. If you tell an LLM “Turn on the lights when the room gets too dark,” it doesn’t need explicit rules; it can break that sentence into steps: read brightness, evaluate threshold, switch the relay.

So the real challenge becomes bridging these two worlds — the ESP32’s low-level, command-based reality and the LLM’s high-level, reasoning-driven understanding. The old web-server model isn’t built for that. It expects parameters, not sentences.

That’s where the Model Context Protocol (MCP) fits perfectly. Instead of asking the ESP32 to host yet another API, we can let the LLM communicate through a common protocol designed for tool invocation. MCP becomes the translator — it lets the AI reason naturally, and still reach down to the level of a GPIO pin.

In other words, the ESP32 doesn’t have to “understand” English. It just needs to expose its capabilities in a form the AI can use — and MCP gives us the standard language to do that.

3. The Missing Link — What MCP Brings to the Table

Every IoT developer has eventually faced the same question: how do I let something smarter control my devices?

For years, we’ve had REST APIs, WebSockets, and MQTT topics — reliable, structured interfaces that let one program talk to another. But none of them were designed for a system that reasons in natural language. They all assume the client already knows what commands exist and what parameters to send.

That’s why the Model Context Protocol (MCP) matters. It introduces a standard that sits between a large language model and the real world. With MCP, an AI doesn’t have to guess what an ESP32 can do — it can discover its capabilities, see which “tools” are available, and call them safely.

In practice, an MCP server is just a lightweight bridge that tells the AI:

“Here are the functions I can run for you: gpio_write(pin, value), read_temperature(), and wifi_scan(). And here’s how to use them.”

Whenever a model receives a user prompt like “Turn on the fan and check if Wi-Fi is connected,” it can map those requests to the available tools. MCP defines exactly how that exchange happens, in a predictable, machine-readable way.
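To make that exchange concrete, here is a minimal sketch of the data involved, written as plain JavaScript objects. The tool names reuse the illustrative gpio_write, read_temperature, and wifi_scan from above. Their schemas and the buildToolCall helper are assumptions made up for this example, not taken from a real device. The catalogue follows the shape an MCP server returns for a tools/list request, and the helper builds the JSON-RPC tools/call request a client would send.

```javascript
// Hypothetical tool catalogue, shaped like the `tools` array in a
// `tools/list` result. Each tool describes its arguments with JSON Schema.
const tools = [
  {
    name: "gpio_write",
    description: "Set a GPIO pin high (1) or low (0)",
    inputSchema: {
      type: "object",
      properties: {
        pin: { type: "integer" },
        value: { type: "integer", enum: [0, 1] }
      },
      required: ["pin", "value"]
    }
  },
  {
    name: "read_temperature",
    description: "Read the temperature sensor in degrees Celsius",
    inputSchema: { type: "object", properties: {} }
  },
  {
    name: "wifi_scan",
    description: "List visible Wi-Fi networks",
    inputSchema: { type: "object", properties: {} }
  }
];

// Build the JSON-RPC 2.0 request that invokes one tool by name.
// Only checks that required arguments are present; it is not a full
// JSON Schema validator.
function buildToolCall(id, name, args = {}) {
  const tool = tools.find((t) => t.name === name);
  if (!tool) throw new Error(`Unknown tool: ${name}`);
  const missing = (tool.inputSchema.required || []).filter((k) => !(k in args));
  if (missing.length > 0) {
    throw new Error(`Missing arguments for ${name}: ${missing.join(", ")}`);
  }
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name, arguments: args }
  };
}

// "Turn on the fan" could become a call like this one:
console.log(JSON.stringify(buildToolCall(1, "gpio_write", { pin: 5, value: 1 })));
```

The point of the sketch is that the model never sees firmware details. It sees names, descriptions, and argument schemas, and answers with a structured call.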

For IoT, that’s a breakthrough. It means the ESP32 no longer needs to host a complicated REST API or be manually integrated with a cloud service. Instead, the model communicates through a single, standardized interface that can reach any connected device.

You can think of MCP as the USB standard for AI control — one plug that fits every kind of tool. In our case, that “tool” is a microcontroller sitting on your desk.

There are several ways to implement this bridge:

- On the ESP32 itself — a small HTTP or WebSocket server that exposes the MCP tools directly. Simple, but limited by memory and security concerns.

- On a local gateway — for example, a Raspberry Pi that runs a proper MCP server and forwards commands to the ESP32 over serial, BLE, or MQTT.

- In the cloud — a minimal worker (like a Cloudflare Worker) that connects the LLM to the device through a broker or web endpoint.

Each option has trade-offs. Running the MCP server on the ESP32 gives instant control but little room for safety or authentication. Hosting it on a gateway adds flexibility and proper logging. Deploying it in the cloud scales effortlessly — a single endpoint for all your devices, accessible by any AI model that supports MCP.

For this project, I chose to start simple: an MCP bridge hosted outside the ESP32 that talks to a board running existing firmware. No new protocol stack on the device, no firmware rebuilds, just a bridge that makes the old command system accessible to AI.

In the next section, we’ll explore the insight that made that possible — using the ESP32’s built-in command language as the foundation for its MCP interface.

4. Rethinking the ESP32 — The “Terminal Tunnel” Insight

The idea came unexpectedly. While reading through Espressif’s documentation, I remembered that the ESP32 already speaks a structured command language — the good old AT command set. Those simple text commands, used for decades in modems, still exist in the official ESP-AT firmware:

AT+GMR

OK

It’s easy to overlook them because we usually replace AT firmware with custom Arduino or ESP-IDF code. But those commands have a special property that fits perfectly with MCP: they are stateless, readable, and self-contained.


That realization led to a simple question:

What if the ESP32 didn’t need a brand-new API at all?

What if an MCP server could just behave like a terminal tunnel — a pipe that forwards AI requests as AT commands and streams back the response?

That single insight simplified everything. Instead of building dozens of REST endpoints or MQTT handlers, I could let the LLM send existing commands directly through the MCP bridge.

For example:

{

"command": "AT+CWJAP=\"MyWiFi\",\"password\""

}

The server sends it to the board, the ESP32 connects to Wi-Fi, and returns OK. The LLM can immediately confirm the result and decide what to do next.

This approach has three major benefits:

- No new vocabulary needed. AT commands already cover most network, UART, and system tasks. The LLM only needs to learn how to call at.send() and read the replies.

- Perfect for debugging. You can monitor every exchange in plain text; there’s nothing hidden or binary.

- Compatible with existing tools. Any ESP32 board flashed with the official ESP-AT firmware can be used right away, no custom flashing required.

Of course, this method isn’t perfect. The standard ESP-AT firmware doesn’t expose all hardware features — there’s no easy way to toggle arbitrary GPIOs or talk over I²C unless you recompile it with Driver AT extensions. But even with the stock commands, you get a powerful proof-of-concept: an AI talking directly to the microcontroller’s firmware.

At this point, I had a working mental model:

- The LLM issues MCP tool calls.

- The MCP server forwards them as AT commands to the ESP32.

- The ESP32 replies in its own native format.
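The three steps above can be sketched in a few lines. This is a rough illustration rather than working bridge code. The sendLine function is a hypothetical transport (serial, TCP, or anything else that takes a command line and resolves with the raw reply), the fake board and its version string are placeholders, and the parser assumes every reply ends with a line reading OK or ERROR. Real ESP-AT firmware also uses other final lines, such as FAIL, which this sketch ignores.

```javascript
// Split a raw AT reply into payload lines plus a final status.
// Assumption: the last non-empty line is OK or ERROR.
function parseAtReply(raw) {
  const lines = raw
    .split(/\r?\n/)
    .map((l) => l.trim())
    .filter((l) => l.length > 0);
  const status = lines.length > 0 ? lines[lines.length - 1] : "";
  const done = status === "OK" || status === "ERROR";
  return {
    ok: status === "OK",
    lines: done ? lines.slice(0, -1) : lines
  };
}

// Wrap a transport function as an MCP-style tool handler: it receives the
// tool arguments and returns a result with `content` and `isError`.
function makeAtTool(sendLine) {
  return async ({ command }) => {
    const reply = parseAtReply(await sendLine(command));
    const text = reply.lines.join("\n") || (reply.ok ? "OK" : "ERROR");
    return { content: [{ type: "text", text }], isError: !reply.ok };
  };
}

// Fake board for demonstration only: it knows a single command.
const fakeBoard = async (cmd) =>
  cmd === "AT+GMR" ? "AT version:x.y.z\r\n\r\nOK\r\n" : "\r\nERROR\r\n";

makeAtTool(fakeBoard)({ command: "AT+GMR" }).then((result) => console.log(result));
```

Keeping the transport behind a single function means the same handler works whether the board hangs off a USB serial port or a network socket.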

It was minimal, elegant, and very much in the spirit of IoT tinkering — using what already exists in a new way.

Still, I wanted something that could go beyond networking and talk to sensors or relays directly. That’s when I started looking into alternative firmwares, to see if any of them could serve as a more flexible “language” between the LLM and the hardware.

5. Exploring Better Firmware Candidates

Once the “terminal tunnel” concept was working in theory, the next logical step was to explore what other firmwares could serve as the language layer between the ESP32 and the LLM.

The AT firmware proved that the idea was viable, but it was still limited — it couldn’t toggle a pin, read a sensor, or trigger an event without extra compilation. So I started looking at what else existed in the ESP32 ecosystem.

ESP-AT — Structured, but Shallow

Espressif’s official AT firmware is still one of the most stable and well-documented options. It’s great for networking and diagnostics, but it was never meant to handle real-world control scenarios.

You can connect to Wi-Fi, open sockets, or check the chip version, but without enabling Driver AT features, you can’t say:

AT+GPIOWRITE=5,1

and expect anything to happen.

It’s a clean foundation for a terminal interface, but to build a genuinely useful bridge between MCP and IoT, I needed more expressive firmware.

ESPHome — Flexible, but Overstructured

Next, I looked at ESPHome, which is essentially a configuration-based firmware generator. You describe what you want — sensors, actuators, triggers — in YAML, and it compiles a matching firmware.

ESPHome is powerful and polished, but it’s declarative. Once flashed, your logic is frozen inside the firmware. It doesn’t lend itself to dynamic AI control, because every change requires recompiling the configuration.

The MCP bridge would have to call a cloud API or modify the YAML file, not talk to the device directly. That felt too heavy for a real-time AI conversation.

Mongoose OS — Programmable, but Too Heavy

I also considered Mongoose OS, a solid platform for building connected devices in JavaScript or C. It supports OTA updates, MQTT, and cloud integration, and it can certainly talk to AI systems.

But Mongoose OS introduces its own scripting environment, configuration layers, and cloud backend. For this experiment, I wanted something closer to bare metal — a firmware that could respond to human-like commands instantly, without building an entire app stack around it.

Tasmota — Conversational by Nature

That’s when Tasmota stood out.

Originally built for ESP8266 smart plugs, Tasmota evolved into a full command-based environment for ESP32 as well. It speaks a clean, textual protocol — not AT commands, but short readable ones like:

Power1 ON

Backlog PulseTime1 400; Power1 1

Rule1 ON Tele-DS18B20#Temperature>25 DO Publish cmnd/notify/topic {"alert":"Temp high"} ENDON

Those commands can be sent over serial, HTTP, or MQTT. They’re self-explanatory and map perfectly to LLM reasoning.
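As a small illustration of how the same command travels over two of those channels, the sketch below turns a command string into an HTTP URL and into an MQTT publish. The helper names and the device topic tasmota_relay are made up for this example, and the MQTT mapping assumes Tasmota’s default topic layout (cmnd/<topic>/<command>, with the arguments as payload).

```javascript
// Build the HTTP request URL for a Tasmota command.
function toHttpUrl(deviceIp, command) {
  return `http://${deviceIp}/cm?cmnd=${encodeURIComponent(command)}`;
}

// Build the MQTT publish for the same command: the command name goes into
// the topic, everything after the first space becomes the payload.
function toMqttMessage(deviceTopic, command) {
  const trimmed = command.trim();
  const space = trimmed.indexOf(" ");
  const name = space === -1 ? trimmed : trimmed.slice(0, space);
  const payload = space === -1 ? "" : trimmed.slice(space + 1).trim();
  return { topic: `cmnd/${deviceTopic}/${name}`, payload };
}

console.log(toHttpUrl("192.168.1.212", "Power1 ON"));
// → http://192.168.1.212/cm?cmnd=Power1%20ON

console.log(toMqttMessage("tasmota_relay", "Backlog PulseTime1 400; Power1 1"));
```

Either way the device receives the same short text command, which is what makes a single generic tool practical.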

Unlike ESP-AT, Tasmota supports relays, PWM, I²C sensors, timers, and even internal rule scripting. In other words, it already behaves like a command shell for IoT — exactly what an AI model could understand.

Tasmota also solves one of the biggest issues I had with other firmwares: it can execute autonomous rules even without external control. That means the LLM doesn’t need to poll or constantly monitor; it can just configure rules and let the firmware handle local logic.
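A sketch of what “configure rules and let the firmware handle local logic” could look like from the bridge’s side: one helper that produces the two commands needed to store a rule and switch it on. The helper name buildRule is hypothetical, and the trigger reuses the example from above.

```javascript
// Compose the commands that define and enable one Tasmota rule set.
// Tasmota provides three rule sets: Rule1, Rule2, and Rule3.
function buildRule(slot, trigger, action) {
  if (![1, 2, 3].includes(slot)) throw new Error("slot must be 1, 2, or 3");
  return [
    `Rule${slot} ON ${trigger} DO ${action} ENDON`, // store the rule text
    `Rule${slot} 1` // enable the rule set
  ];
}

const [defineCmd, enableCmd] = buildRule(1, "Tele-DS18B20#Temperature>25", "Power1 1");
console.log(defineCmd); // → Rule1 ON Tele-DS18B20#Temperature>25 DO Power1 1 ENDON
console.log(enableCmd); // → Rule1 1
```

Once both commands have been sent, the temperature check runs on the device itself, so the model does not have to stay in the loop.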

From an MCP perspective, this opens a huge opportunity. Instead of exposing hundreds of specialized functions, we can have a single MCP tool, something like:

{
  "name": "tasmota.cmd",
  "parameters": { "command": "string" }
}

That one tool could execute any command the firmware supports. The LLM doesn’t need to know the syntax ahead of time; it can query the command list or learn by example.

With that realization, the direction became clear: I would use Tasmota on ESP32 as the IoT-side firmware and connect it to an MCP server running outside the device. The LLM would speak through MCP, the server would send textual commands to Tasmota, and the device would respond, all in real time.

In the next section, we’ll walk through that proof-of-concept: an ESP32 running Tasmota, a relay, a temperature sensor, and an AI model that can control both with plain English.

6. The Proof-of-Concept — When the ESP32 Starts Listening

After weeks of sketching ideas and experimenting with different firmwares, I finally had a setup that worked — simple, elegant, and completely local. No clouds, no APIs, no MQTT brokers. Just a large language model talking to an ESP32 through a local MCP server, using nothing more than plain HTTP and human-readable commands.

Setup Overview

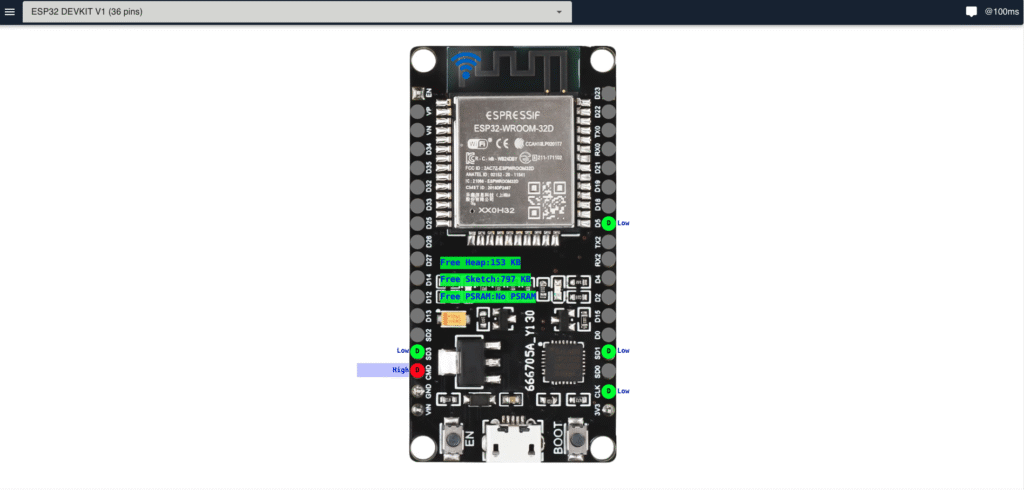

For this proof-of-concept, I used a regular ESP32 DevKit board with GPIO 5 mapped to Relay 1 in Tasmota. That’s the pin that drives the relay, and for this experiment, it became the one I asked the AI to control.

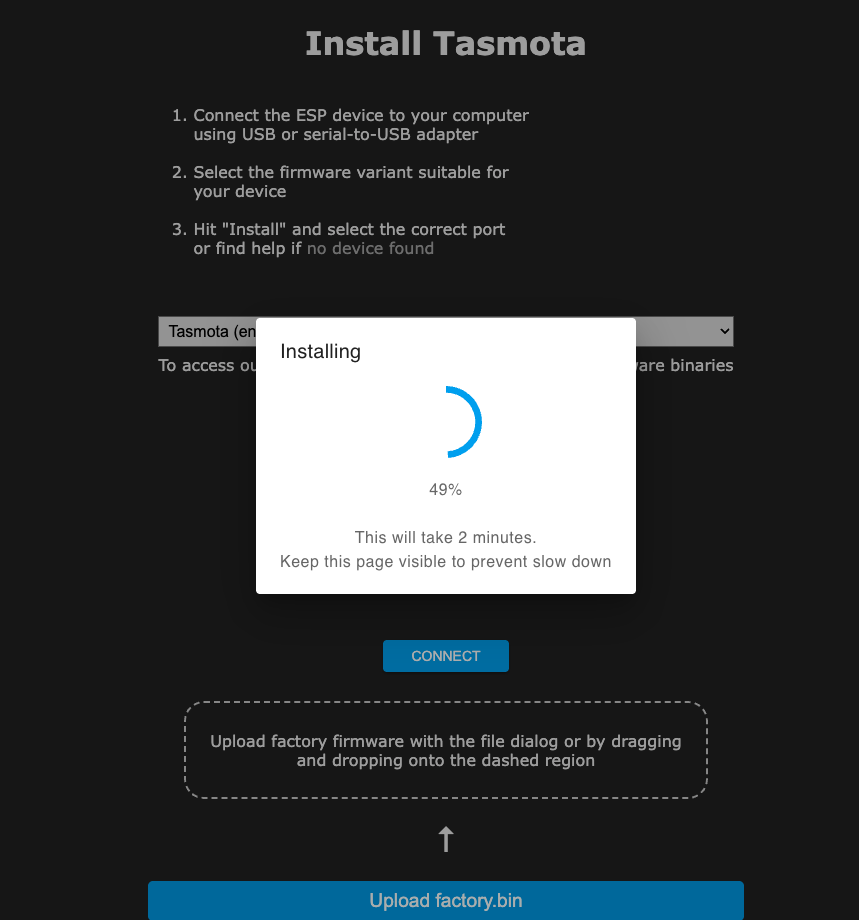

I flashed Tasmota32 Generic using the online Tasmotizer tool — the easiest method by far. Once the ESP32 joined my Wi-Fi, I opened its web dashboard and confirmed it responded to commands like:

http://192.168.1.212/cm?cmnd=Power1%201

The relay clicked on immediately. That was all I needed: proof that the firmware could be driven entirely over HTTP.
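Tasmota answers these HTTP commands with a small JSON body. As a hedged sketch of how a bridge could interpret it, the helper below pulls relay states out of a reply. It assumes bodies such as {"POWER":"ON"} or {"POWER1":"ON"} (the key can differ depending on how many relays are configured), and the name parsePowerState is made up for illustration.

```javascript
// Extract relay states from a Tasmota HTTP reply body.
// Returns an object like { POWER1: true }, or {} when the body is not JSON
// or carries no power state.
function parsePowerState(body) {
  let data;
  try {
    data = JSON.parse(body);
  } catch {
    return {};
  }
  if (data === null || typeof data !== "object") return {};
  const states = {};
  for (const [key, value] of Object.entries(data)) {
    // Accept POWER as well as POWER1, POWER2, ...
    if (/^POWER\d*$/.test(key) && (value === "ON" || value === "OFF")) {
      states[key] = value === "ON";
    }
  }
  return states;
}

console.log(parsePowerState('{"POWER":"ON"}')); // → { POWER: true }
```

The bridge shown later simply returns the raw text, which is enough for a language model. A parser like this only matters if other code needs to act on the result.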

(For a detailed guide on flashing and configuring Tasmota on ESP32, check my earlier article Using Tasmota on ESP32.)

Building the MCP Bridge

Instead of running MCP on the ESP32, I built a small Node.js MCP HTTP server that lives on my laptop. Its job is simple: accept a command from the LLM, forward it to Tasmota over HTTP, and return the raw response.

You can find the entire project in my GitHub repository: https://github.com/tinkeriotops/esp32-mcp

Project layout and dependencies

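mkdir esp32-mcp && cd esp32-mcp

npm init -y

npm pkg set type=module

npm i @modelcontextprotocol/sdk express zod

Create index.js with the MCP server (I hardcoded the device IP so the LLM doesn’t need it). The file uses ES module imports and top-level await, which is why the package is marked as an ES module above, and it relies on the built-in fetch of Node.js 18 or newer: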

#!/usr/bin/env node
import express from "express";
import { randomUUID } from "node:crypto";
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { StreamableHTTPServerTransport } from "@modelcontextprotocol/sdk/server/streamableHttp.js";
import { z } from "zod";

const PORT = process.env.PORT || 3000;
const FETCH_TIMEOUT_MS = 4000;
const DEVICE_IP = "192.168.1.212";

// GET a URL and give up if the device does not answer within `ms` milliseconds
async function fetchWithTimeout(url, ms) {
  const ctrl = new AbortController();
  const t = setTimeout(() => ctrl.abort(), ms);
  try {
    const resp = await fetch(url, { signal: ctrl.signal });
    const text = await resp.text();
    return { ok: true, text, status: resp.status };
  } catch (e) {
    return { ok: false, error: e.message || String(e) };
  } finally {
    clearTimeout(t);
  }
}

const server = new McpServer({ name: "tasmota-mcp", version: "0.4.0" });

// Single tool: send a Tasmota command (device IP is fixed)
server.registerTool(
  "tasmota-cmd",
  {
    description:
      "Send a Tasmota command to the ESP32 via HTTP. Example: Backlog PulseTime1 400; Power1 1",
    // Input schema given as a plain object of Zod fields
    inputSchema: {
      command: z.string().describe("Tasmota command string")
    }
  },
  async ({ command }) => {
    const url = `http://${DEVICE_IP}/cm?cmnd=${encodeURIComponent(command)}`;
    const res = await fetchWithTimeout(url, FETCH_TIMEOUT_MS);
    if (!res.ok) {
      return {
        content: [{ type: "text", text: `Error contacting device: ${res.error}` }],
        isError: true
      };
    }
    return { content: [{ type: "text", text: res.text }] };
  }
);

// Lightweight docs as MCP resources (model can reference these)
const TASMOTA_QR_MD = `
# Tasmota Quick Reference (ESP32)
Power: \`Power1 1\` | \`Power1 0\` | \`Power1 TOGGLE\`
Timer: \`PulseTime1 <val>\` (use 100+seconds for >11.1s; 400 = 300s = 5 min)
Backlog: \`Backlog PulseTime1 400; Power1 1\`
HTTP form: \`http://<device-ip>/cm?cmnd=<url-encoded>\`
Docs: Commands https://tasmota.github.io/docs/Commands/
`.trim();

const QUICKREF_URI = "tasmota://quickref";
const COMMANDS_URL = "https://tasmota.github.io/docs/Commands/";

// registerResource takes a name, a URI, metadata, and a read callback
server.registerResource(
  "tasmota-quickref",
  QUICKREF_URI,
  { title: "Tasmota Quick Reference", mimeType: "text/markdown" },
  async () => ({
    contents: [{ uri: QUICKREF_URI, mimeType: "text/markdown", text: TASMOTA_QR_MD }]
  })
);

// Resource contents need a text (or blob) body, so this one returns a pointer to the online docs
server.registerResource(
  "tasmota-commands",
  COMMANDS_URL,
  { title: "Tasmota Commands", mimeType: "text/plain" },
  async () => ({
    contents: [{ uri: COMMANDS_URL, mimeType: "text/plain", text: `Full Tasmota command reference: ${COMMANDS_URL}` }]
  })
);

const transport = new StreamableHTTPServerTransport({
  sessionIdGenerator: () => randomUUID(),
  enableDnsRebindingProtection: true
});

await server.connect(transport);

const app = express();

// All MCP traffic goes through this single endpoint
app.all("/mcp", async (req, res) => {
  try {
    await transport.handleRequest(req, res);
  } catch (e) {
    if (!res.headersSent) {
      res.writeHead(500, { "Content-Type": "application/json" })
        .end(JSON.stringify({ jsonrpc: "2.0", error: { code: -32000, message: `Internal error: ${e.message}` }, id: null }));
    }
  }
});

// quick health and a debug passthrough you can hit in a browser
app.get("/health", (req, res) => res.json({ ok: true }));

app.get("/debug", async (req, res) => {
  const cmd = req.query.command;
  if (!cmd) return res.status(400).json({ error: "missing command" });
  const url = `http://${DEVICE_IP}/cm?cmnd=${encodeURIComponent(cmd)}`;
  const out = await fetchWithTimeout(url, FETCH_TIMEOUT_MS);
  res.json({ url, ...out });
});

app.listen(PORT, () => {
  console.log(`MCP HTTP server: http://localhost:${PORT}/mcp`);
  console.log(`Health: http://localhost:${PORT}/health`);
  console.log(`Debug: http://localhost:${PORT}/debug?command=Power1%201`);
});

Run it:

node index.js

The MCP server exposes:

- /mcp for the MCP protocol (used by Copilot/LLMs)

- /debug to test commands manually in a browser

Running It Locally

After completing the Node.js setup, I connected the MCP server directly to Visual Studio Code Copilot Chat, using its built-in support for external MCP servers.

This made the entire setup self-contained — no cloud, no tunnel, no broker.

Configuring the MCP Server in VS Code

Open your MCP configuration file (in current VS Code releases this is typically .vscode/mcp.json in the workspace, and it can also be reached through the MCP: Add Server command) and add this entry:

{

"servers": {

"esp32-mcp": {

"url": "http://localhost:3000/mcp",

"type": "http"

}

},

"inputs": [

{

"id": "tasmota-command",

"type": "text",

"title": "Tasmota Command",

"description": "Enter the Tasmota command to send to the ESP32"

}

]

}

When you reload VS Code, Copilot automatically lists the new MCP server as esp32-mcp and exposes the tool tasmota-cmd.

Talking to the ESP32 from Copilot

Once registered, the relay can be controlled right from the Copilot chat window.

Typing:

/tasmota-cmd {"command": "Power1 1"}

instantly sends the Power1 1 command to Tasmota and the relay clicks ON.

Running:

/tasmota-cmd {"command": "Backlog PulseTime1 400; Power1 1"}

keeps it on for five minutes, then turns it off automatically — exactly as defined by Tasmota’s PulseTime feature.
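The value 400 is not arbitrary. PulseTime uses two ranges: values from 1 to 111 count tenths of a second, and values from 112 to 64900 mean “value minus 100” seconds, so five minutes is 300 + 100 = 400. A small sketch of that conversion follows; the function names are made up for illustration.

```javascript
// Convert a duration in seconds to a Tasmota PulseTime value.
//   1..111     -> 0.1 s steps (0.1 s to 11.1 s)
//   112..64900 -> (value - 100) seconds (12 s to 64800 s)
function secondsToPulseTime(seconds) {
  if (!Number.isFinite(seconds) || seconds <= 0) {
    throw new RangeError("duration must be a positive number of seconds");
  }
  if (seconds <= 11.1) return Math.max(1, Math.round(seconds * 10));
  const value = Math.round(seconds) + 100;
  if (value > 64900) throw new RangeError("duration exceeds 64800 seconds");
  // Durations between 11.1 s and 12 s round up to the first whole-second value
  return Math.max(value, 112);
}

// Build the Backlog command that switches a relay on for a fixed time.
function timedPowerOn(relay, seconds) {
  return `Backlog PulseTime${relay} ${secondsToPulseTime(seconds)}; Power${relay} 1`;
}

console.log(secondsToPulseTime(300)); // → 400
console.log(timedPowerOn(1, 300)); // → Backlog PulseTime1 400; Power1 1
```

In practice the model works this out from the quick reference on its own. The arithmetic is spelled out here because the offset of 100 is easy to get wrong by hand.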

The device side of this stays on the local network and completes in under half a second, with no IoT cloud service or device API keys involved.

It’s just Copilot → MCP → Node server → Tasmota → ESP32.

Why This Matters

This proof of concept shows that LLMs don’t need custom SDKs or IoT clouds to interact with real hardware.

As long as the firmware exposes human-readable commands and the server speaks MCP, the AI can reason about intent and execute physical actions directly.

The ESP32 stops being just a microcontroller; it becomes an AI-addressable endpoint — a small, local device that understands requests through the same universal protocol that powers modern language models.

7. From Commands to Understanding

When Code Turns into Conversation

The first time I watched the relay click after sending a short sentence, it felt different from running a script.

This was no longer about code execution; it was a dialogue. The ESP32 was responding to intent, not syntax.

The large language model interpreted my words, translated them into the proper Tasmota command, and executed it through MCP—all in real time.

Natural Language Becomes a Control Layer

With this setup, I can ask something simple like:

“Turn on the relay for five minutes.”

The model instantly maps that phrase to a valid Tasmota command:

Backlog PulseTime1 400; Power1 1

It doesn’t need me to remember command names or formatting rules. Because I exposed the Tasmota documentation as MCP resources, the model can check available options and syntax on its own. The AI isn’t guessing—it’s informed.

Reasoning Beyond Commands

The real value appears when requests get less direct.

If I ask, “Make sure the relay doesn’t stay on longer than five minutes,” the LLM reasons that the correct solution involves setting PulseTime1. It chooses and executes the right command chain.

This is no longer a simple device reacting to parameters—it’s a system capable of adapting logic to context.


Why MCP Changes the IoT Equation

Before MCP, connecting IoT devices to AI logic required custom integrations—API gateways, message brokers, or cloud services. Now, the same ESP32 can be addressed directly by any model that supports MCP.

The communication remains structured and secure, but the language is natural. The AI interprets what needs to happen, then issues the corresponding hardware command.

In effect, the ESP32 becomes an AI-accessible endpoint—a device that doesn’t need custom firmware or API code to understand what you mean. The LLM provides reasoning; the MCP bridge provides execution.

A Step Toward Understanding Devices

This project showed that intelligence in IoT doesn’t need more firmware features or hardware upgrades—it needs context.

MCP gives the ESP32 that context.

When a language model can interpret goals and translate them into structured device actions, the line between software reasoning and hardware execution starts to fade.

It’s a small but meaningful shift: from smart devices to understanding devices—hardware that finally listens in human terms.

8. Conclusion — A New Language for Devices

This project started as a simple question: what if an AI model could talk directly to a microcontroller?

Not through a cloud API or a proprietary dashboard, but in plain language — the way we already talk to software.

By combining ESP32, Tasmota, and the Model Context Protocol, that idea became a working reality.

The relay on my desk is no longer controlled by a fixed script; it responds to intent. When I tell the model to turn it on for five minutes, it knows what that means, finds the correct syntax, and performs the action through the MCP bridge.

What makes this approach different is its simplicity.

There’s no specialized integration, no platform lock-in, and no external dependency beyond what already exists.

Tasmota provides a transparent command interface, MCP defines a universal way for AIs to use tools, and the ESP32 does what it does best — execute.

The result isn’t another smart device; it’s a context-aware device.

A microcontroller that can understand why you’re asking for an action, not just how to perform it.

It’s proof that the future of IoT doesn’t have to depend on more complexity. Sometimes, all it takes is a common language — and now, thanks to MCP, both humans and machines finally share one.